Data Driven Journalism – original post can be found here

Editor’s note

Between April and August 2011 the European Journalism Centre (EJC) circulated a survey on training needs for data journalism. We asked two experts in data journalism from our Editorial Board, journalist and trainer Mirko Lorenz and journalism professor and trainer Paul Bradshaw, to analyse the results and share their insights with us. This article is Mirko Lorenz’s analysis of the survey results. On Thursday we will publish Paul Bradshaw’s analysis, which will be accompanied by the survey data.

Competency with numbers and statistics is a promising field. The assumption is that this competency would enable journalism to gain greater depth and accuracy. But what are the training needs, and how can we meet them? The results of a survey run by the European Journalism Centre provide some insights. Based on those results, here is a run-down of the opportunities and challenges that lie ahead.

Data driven journalism on the rise

Over the last two years there has been growing interest in data driven journalism. The Guardian, The New York Times, The Texas Tribune and The Los Angeles Times are presenting new ways to look at data from different angles. This adds clarity and often creates surprises. As a result these offerings are becoming increasingly popular, especially when readers get a chance to access the raw data.

However, many questions about data analysis remain unsolved. How can journalists make better use of numbers, avoid the frequent misinterpretation of statistics, check the reliability of the data they collect, and present the facts simply yet accurately in order to help tackle pressing problems?

Results from the EJC survey on training needs for data journalism

In an attempt to discover better and more effective ways of training, the European Journalism Centre conducted a survey that ran from April to August. Roughly 200 journalists took part, and about 134 of them completed the entire survey. After much anticipation, the results are finally in.

Respondents were already familiar with the field of data journalism in some way, so we can make no claims of representativeness. Nor are these insights sufficient for designing a training session that covers all aspects of data journalism. The answers to the survey’s 26 questions will, however, help you get a better grip on the sentiment, expectations and concerns that are out there.

Selected findings

Here is a brief list of the findings, based on the answers to the survey questions:

1. Opportunity

A growing group of journalists is highly interested in investigating datasets further. Using new sources and new tools is like shining a light into the black boxes that surround us. Or, as one respondent put it: ‘Data can offer insights that contradict popular presumptions’.

2. Motivation

Opinions on what a good data journalist needs to learn vary wildly. Some say that the journalist of the future should be a researcher, programmer and designer, packing three university degrees into one. Judging from conversations and comments, this is a scary prospect for many journalists. Gradually, though, this ‘super-expert’ model is losing ground. One reason is that the tools are getting easier to use: the barrier to coding is falling, and writing a few lines of code is becoming less complex. Another is that good examples of data journalism show that diligence, persistence and creative thinking are probably as important as formal knowledge.

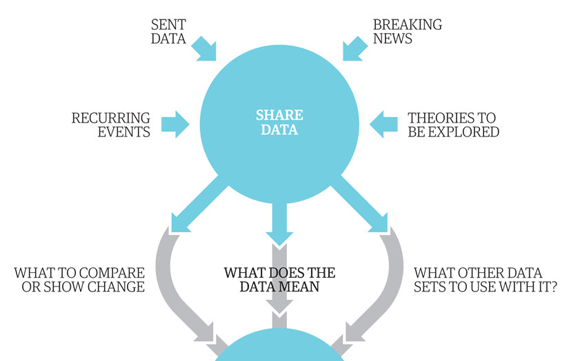
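To give a sense of how short such code can be, here is a minimal Python sketch. It assumes a small spreadsheet of hypothetical spending figures; the column names and the helper functions `column_total` and `largest_item` are illustrative only and do not come from the survey. Using nothing but the standard `csv` module, it totals a column and finds the largest item.

```python
import csv
import io

# Hypothetical data standing in for a spreadsheet downloaded from a public body.
RAW = """department,spending
Health,120
Education,95
Transport,40
"""


def column_total(text: str, column: str) -> float:
    """Sum one numeric column of CSV text."""
    reader = csv.DictReader(io.StringIO(text))
    return sum(float(row[column]) for row in reader)


def largest_item(text: str, label: str, column: str) -> str:
    """Return the label of the row with the highest value in `column`."""
    reader = csv.DictReader(io.StringIO(text))
    top = max(reader, key=lambda row: float(row[column]))
    return top[label]


if __name__ == "__main__":
    print(column_total(RAW, "spending"))                # 255.0
    print(largest_item(RAW, "department", "spending"))  # Health
```

With a real file, `io.StringIO(text)` would simply be replaced by an opened file object; the rest stays the same.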

3. Use of data

What are the expectations? The participating journalists see several ways in which data can be used. First, they want to use data more to provide context, background and perspective. Second, they want to test the reliability of public claims: are they true or not? Encouragingly, respondents see data journalism as more than just adding colourful pictures to a story. It can uncover new perspectives, giving more depth and shape to the overall picture.

4. Training needs

Where do journalists need support? Interestingly, the answers amount to a call for a systematic approach: training in how to analyse data and how to visualise it is in high demand. Other topics, such as how to search for data and how to check its reliability, are viewed as important as well. Learning how to programme ranks notably low…

5. Practical use

Given what datasets could do for newsrooms, there is clearly a demand for personal skills: journalists want to be able to work with data themselves. Experts should be available, but they should assist existing staff rather than keep their knowledge to themselves.

6. Barriers

Working on deadlines does not leave much room to sit down and tinker with data for hours or days. But while lack of time was cited as one barrier to adopting data journalism, the more important barrier was clearly lack of knowledge. Combined with a lack of resources and of management support, this shows why data journalism could benefit from systematic training.

Conclusion: Mind the gaps

Combining the sentiment from the survey with my own experience in preparing training modules for data driven journalism, the current challenge can be boiled down to three words: Mind the gaps.

1. Systematic approach needed: Misinterpretation of numbers and statistics is common, and journalists are quite often part of the problem. Wrongly extrapolated trends, misread complex developments and missing information are frequent mistakes in journalistic discourse.

So, trainers and institutions in this field should be careful not to skip the very basics when working with numbers and statistics. There is a need for diligence and accuracy, not for bigger pictures.

2. Everybody, please move: Journalists have to learn, but publishers have to do their share too. Working with data can bring new opportunities for publications, whether print-only or spread across multiple channels. Used correctly, data, numbers, facts and context can create a solid base. Today, however, the use of numbers often leads to sensationalism, and journalists sometimes add confusion when they do not take the time to investigate the data. This may not be right, but it makes sense as long as the media remain mainly in the business of getting attention. Yet the media no longer have a monopoly on attention: people can use many different channels to get their information. I would argue that today the scarce resource is trust. Data journalism used wrongly will only amplify attention for a short time, and might have the opposite effect should it become clear that the analysis was faulty.

3. Do not mix three professions into one: It is true that the pioneers of data journalism often possess remarkable skills. They are journalists who know how to write code and produce webpages. Most of them trained themselves, driven by curiosity to visualise unwieldy data in new ways. As the field moves forward, however, letting everyone do what they do best might yield bigger gains. Does this mean journalists will be the facilitators of the process, asking questions and searching for data? Yes. Will these same journalists be tinkering with their publication’s content management system and producing jaw-dropping visuals just in time? Not likely. As data driven journalism develops, there should be teams, the idea being that a talented designer helps the journalists incorporate data into stories more quickly and effectively.
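The first gap, misreading numbers, can be made concrete with a small Python sketch. All figures are hypothetical (a value of 10 in one year and 20 the next, and a rate moving from 4% to 5%), and the function names are illustrative only. It shows two of the basics: two equally simple extrapolations from the same two data points diverge sharply, and a rise of one percentage point is not the same as a 1% rise.

```python
# Hypothetical numbers only: a figure of 10 in one year and 20 the next,
# and a rate that moves from 4% to 5%.


def linear_extrapolation(first: float, second: float, years_ahead: int) -> float:
    """Project forward assuming the same absolute change every year."""
    step = second - first
    return second + step * years_ahead


def compound_extrapolation(first: float, second: float, years_ahead: int) -> float:
    """Project forward assuming the same growth rate every year."""
    ratio = second / first
    return second * ratio ** years_ahead


def percentage_change(old: float, new: float) -> float:
    """Relative change, in percent of the old value."""
    return (new - old) / old * 100


def percentage_point_change(old_rate: float, new_rate: float) -> float:
    """Absolute difference between two rates that are already percentages."""
    return new_rate - old_rate


if __name__ == "__main__":
    # Same two data points, two plausible-looking trends, very different futures.
    print(linear_extrapolation(10, 20, 5))    # 70
    print(compound_extrapolation(10, 20, 5))  # 640.0
    # A rate rising from 4% to 5%: 1 percentage point, but a 25% increase.
    print(percentage_point_change(4.0, 5.0))  # 1.0
    print(percentage_change(4.0, 5.0))        # 25.0
```

Neither projection is "right": two data points simply cannot distinguish a steady increase from steady growth, which is exactly why the basics deserve time in training.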

These processes are still underway and the picture is incomplete at best. But the prospects are still enticing. What are your thoughts? Let us know.

Resources:

- Slides from presentation of preliminary results of EJC survey on training needs for data journalism
